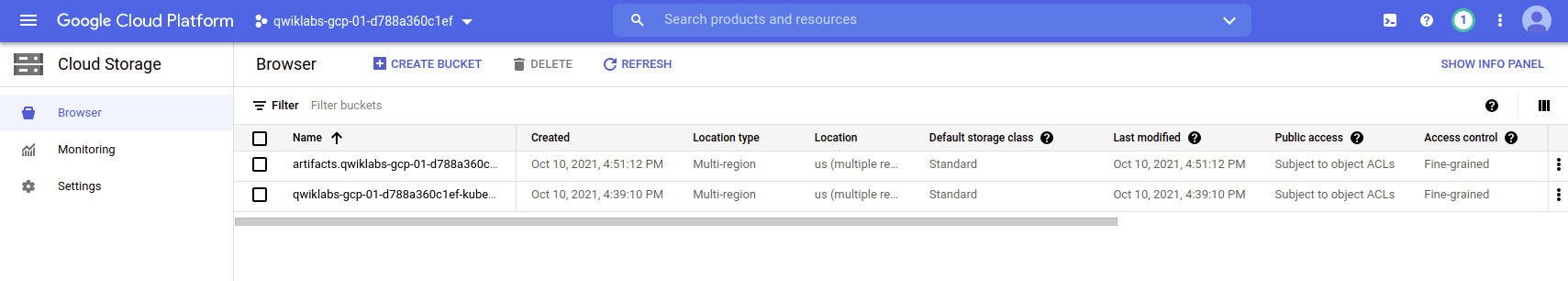

Continuous Training with TFX and Kubeflow Pipelines

In this post I explore TFX and its integration with Kubeflow Pipelines on Google AI Platform.

This post is mainly a summary of my notes, written for my own learning.

1. Dataset

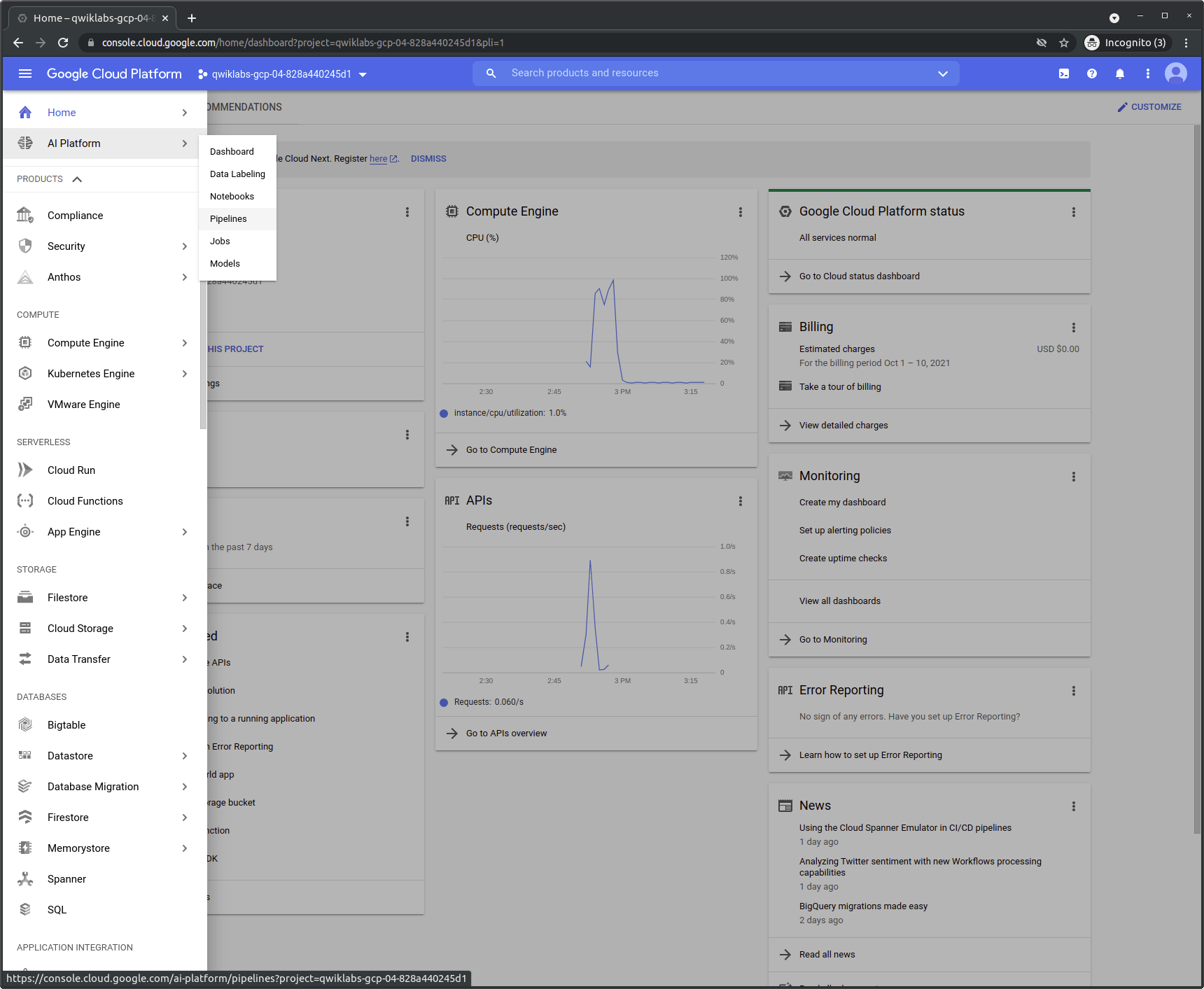

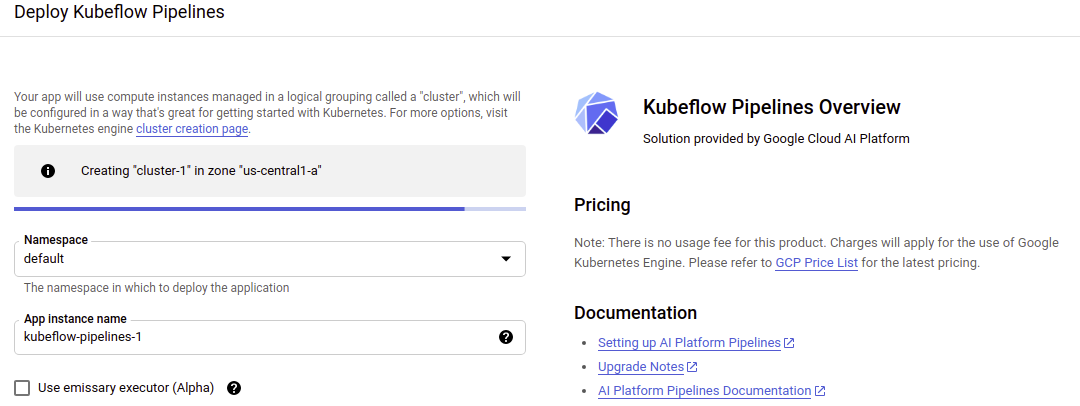

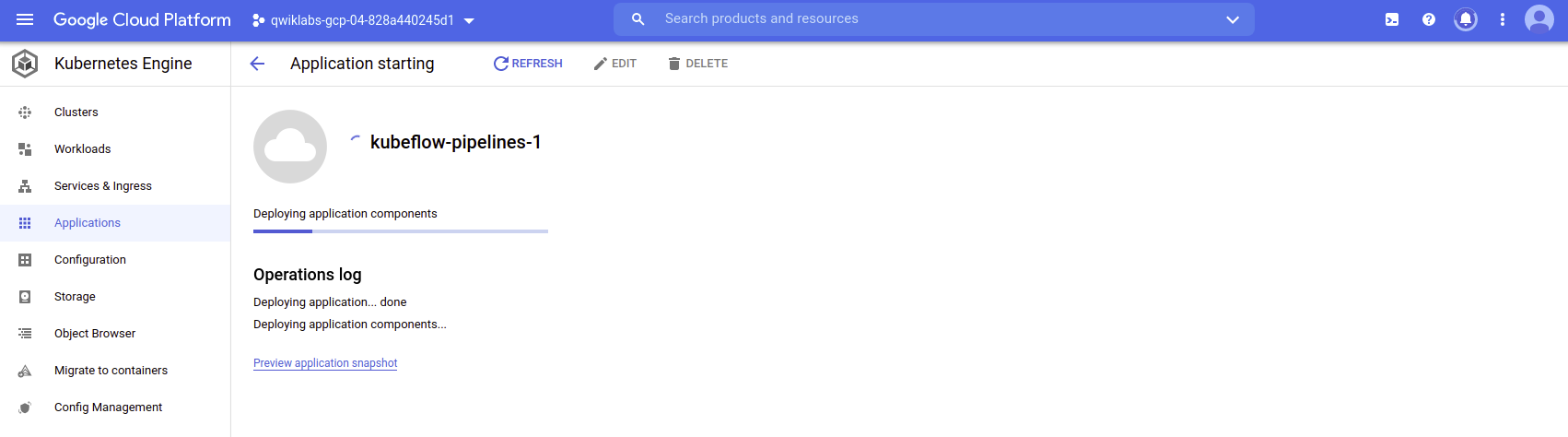

2. Create Clusters

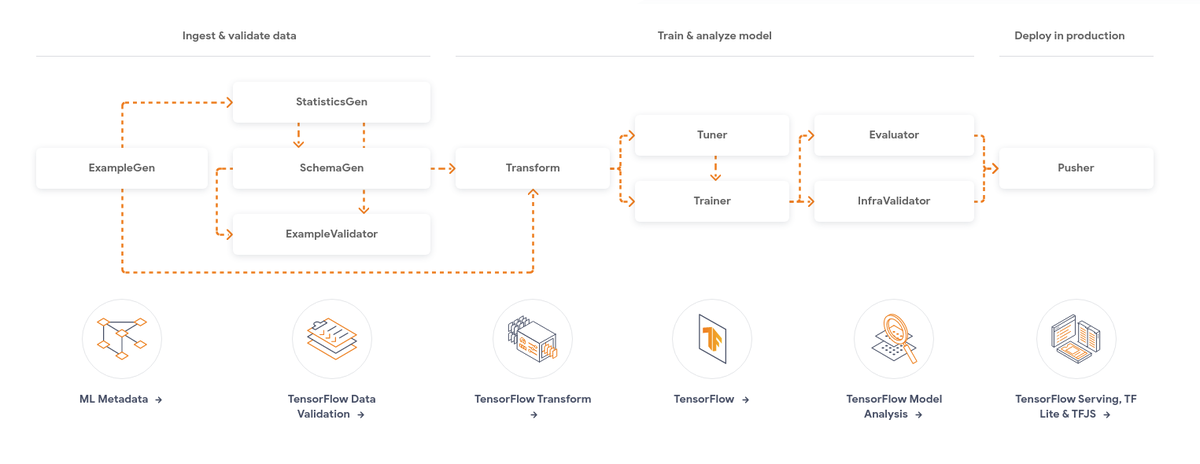

3. Understanding TFX Pipelines

To understand TFX pipelines, we first need to understand a few key terms. For the full tutorial, refer to TensorFlow’s article here.

Artifact

Artifacts are the output of the steps in a TFX pipeline. They can be used by subsequent steps in the pipeline.

Artifacts must be strongly typed with an artifact type registered in the ML Metadata store. This point is not very clear to me yet; I need to research it and will come back to expand on it later.
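To make the "strongly typed" idea more concrete, here is a minimal sketch in plain Python. It is not the ML Metadata API: ToyMetadataStore, Artifact, and the example URI are hypothetical names made up for illustration. The only point it shows is that a store can refuse any artifact whose type was not registered first, and that the record holds a type name and a URI rather than the payload itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Artifact:
    """Toy artifact record: a registered type name plus a URI to the payload."""
    type_name: str
    uri: str
    properties: Dict[str, str] = field(default_factory=dict)


class ToyMetadataStore:
    """Hypothetical in-memory stand-in for a metadata store (not the MLMD API)."""

    def __init__(self) -> None:
        self._types: Set[str] = set()
        self._artifacts: List[Artifact] = []

    def register_type(self, type_name: str) -> None:
        self._types.add(type_name)

    def put(self, artifact: Artifact) -> int:
        # Reject artifacts whose type was never registered.
        if artifact.type_name not in self._types:
            raise TypeError(f"unregistered artifact type: {artifact.type_name}")
        self._artifacts.append(artifact)
        return len(self._artifacts) - 1  # position in the store acts as the id

    def get_by_type(self, type_name: str) -> List[Artifact]:
        return [a for a in self._artifacts if a.type_name == type_name]
```

A later step could then ask the store for every artifact of a given type, for example get_by_type("Examples"), without knowing which earlier step produced it.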

Questions

- Where do artifacts get stored?

- What needs to change if we run the pipeline in the cloud?

Parameter

Parameters are values that we set through configuration instead of hard-coding them; they play a role similar to the hyperparameters of an ML/DL model.
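As a rough illustration of "configuration instead of hard-coding", the sketch below reads two values from a mapping (the process environment by default) and falls back to defaults. This is plain Python, not the TFX parameter mechanism; TrainingParams, load_params, and the variable names TRAIN_STEPS and LEARNING_RATE are assumptions chosen for the example.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class TrainingParams:
    """Hypothetical parameter set with defaults used when nothing is configured."""
    train_steps: int = 1000
    learning_rate: float = 0.01


def load_params(env: Optional[Mapping[str, str]] = None) -> TrainingParams:
    """Build the parameters from a mapping, defaulting to the process environment."""
    env = os.environ if env is None else env
    defaults = TrainingParams()
    return TrainingParams(
        train_steps=int(env.get("TRAIN_STEPS", defaults.train_steps)),
        learning_rate=float(env.get("LEARNING_RATE", defaults.learning_rate)),
    )
```

With this shape, changing the number of training steps between runs means changing the configuration, not the pipeline code.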

Component

A component is an implementation of a task in our pipeline. Components in TFX are composed of:

- Component specification: This defines the component’s input and output artifacts, and the component’s parameters.

- Executor: This implements the real work of a step in the pipeline.

- Component interface: This packages the component specification and executor for use in a pipeline. (This is not very clear.)
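One way to picture how the three parts fit together is the toy model below. It does not use any TFX classes: ToyComponentSpec, ToyComponent, and count_rows_executor are hypothetical names. The spec only declares names and types, the executor is an ordinary function that does the work, and the interface bundles the two and checks the supplied arguments against the spec.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class ToyComponentSpec:
    """Declares what a component consumes, produces, and can be configured with."""
    inputs: Dict[str, str]       # input name -> artifact type name
    outputs: Dict[str, str]      # output name -> artifact type name
    parameters: Dict[str, type]  # parameter name -> Python type


Executor = Callable[[Dict[str, Any], Dict[str, Any]], Dict[str, Any]]


def count_rows_executor(inputs: Dict[str, Any], parameters: Dict[str, Any]) -> Dict[str, Any]:
    """The real work of the step: count at most `limit` rows of the input."""
    rows = inputs["examples"][: parameters["limit"]]
    return {"statistics": {"num_rows": len(rows)}}


class ToyComponent:
    """Interface: packages a spec with an executor and validates the arguments."""

    def __init__(self, spec: ToyComponentSpec, executor: Executor,
                 inputs: Dict[str, Any], parameters: Dict[str, Any]) -> None:
        missing = set(spec.inputs) - set(inputs)
        if missing:
            raise ValueError(f"missing inputs: {sorted(missing)}")
        for name, expected in spec.parameters.items():
            if not isinstance(parameters.get(name), expected):
                raise TypeError(f"parameter {name!r} must be {expected.__name__}")
        self.spec, self.executor = spec, executor
        self.inputs, self.parameters = inputs, parameters

    def run(self) -> Dict[str, Any]:
        outputs = self.executor(self.inputs, self.parameters)
        unexpected = set(outputs) - set(self.spec.outputs)
        if unexpected:
            raise ValueError(f"undeclared outputs: {sorted(unexpected)}")
        return outputs


spec = ToyComponentSpec(
    inputs={"examples": "Examples"},
    outputs={"statistics": "ExampleStatistics"},
    parameters={"limit": int},
)
```

In this sketch the pipeline author only touches the interface: they construct ToyComponent with inputs and parameters and never call the executor directly.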

Questions

- Where does the component get run?

- Do components run in the same environment? Same OS and same dependencies?

- What if each component requires different dependencies?

Pipeline

TensorFlow says that a TFX pipeline is a portable implementation of an ML workflow, as it can be run on different orchestrators, such as Apache Airflow, Apache Beam, and Kubeflow Pipelines.

First, we build a pipeline, which is of type tfx.orchestration.pipeline.Pipeline:

    from tfx.orchestration import pipeline


    def _create_pipeline() -> pipeline.Pipeline:
        """Build and return the TFX pipeline (components omitted in this outline)."""
        pass

To select a different orchestration tool, we import the corresponding runner from the tfx.orchestration module.

    # Airflow
    from tfx.orchestration.airflow.airflow_dag_runner import AirflowDagRunner
    from tfx.orchestration.airflow.airflow_dag_runner import AirflowPipelineConfig

    # Airflow picks up the DAG from this module-level variable.
    DAG = AirflowDagRunner(AirflowPipelineConfig()).run(_create_pipeline())

    # Kubeflow
    from tfx.orchestration.kubeflow import kubeflow_dag_runner

    # Same pipeline factory as above, handed to a different runner.
    kubeflow_dag_runner.KubeflowDagRunner().run(_create_pipeline())
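The portability idea, one pipeline definition handed to interchangeable runners, can be sketched without TFX at all. Everything below is a toy: ToyPipeline, LocalRunner, SpecRunner, and the two steps are hypothetical names, and the JSON description is only a loose analogy for a runner that produces something for an orchestrator to execute later instead of executing the steps itself.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


@dataclass
class ToyPipeline:
    """Orchestrator-agnostic definition: a name and an ordered list of steps."""
    name: str
    steps: List[Step]


class LocalRunner:
    """Executes the steps immediately, in order, in the current process."""

    def run(self, pipeline: ToyPipeline) -> Dict:
        state: Dict = {}
        for step in pipeline.steps:
            state = step(state)
        return state


class SpecRunner:
    """Does not execute anything; emits a description another system could run."""

    def run(self, pipeline: ToyPipeline) -> str:
        return json.dumps(
            {"name": pipeline.name, "steps": [s.__name__ for s in pipeline.steps]}
        )


def ingest(state: Dict) -> Dict:
    return {**state, "rows": [1, 2, 3]}


def count(state: Dict) -> Dict:
    return {**state, "n": len(state["rows"])}


demo = ToyPipeline("demo", [ingest, count])
```

Both LocalRunner().run(demo) and SpecRunner().run(demo) accept the same object, which is the property that lets the pipeline code stay unchanged when the orchestrator changes.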

4. TFX Custom Components

Understanding custom components will get us far! Refer to TensorFlow’s article here.

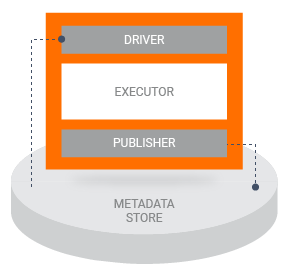

TFX components at runtime

When a pipeline runs a TFX component, the component is executed in three phases:

- First, the Driver uses the component specification to retrieve the required artifacts from the metadata store and pass them into the component.

- Next, the Executor performs the component’s work.

- Then the Publisher uses the component specification and the results from the executor to store the component’s outputs in the metadata store.
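The three phases above can be sketched as three small functions around a dictionary that plays the role of the metadata store. This is a simplification under stated assumptions: the function names, the keys such as "examples/1", and storing values directly in the dictionary are all made up for the example, whereas a real store would hold artifact records and URIs.

```python
from typing import Callable, Dict, List

MetadataStore = Dict[str, object]


def driver(store: MetadataStore, input_keys: Dict[str, str]) -> Dict[str, object]:
    """Phase 1: resolve the declared inputs from the store."""
    return {name: store[key] for name, key in input_keys.items()}


def mean_executor(inputs: Dict[str, object]) -> Dict[str, object]:
    """Phase 2: do the component's work (here, average the input values)."""
    values: List[float] = inputs["examples"]  # type: ignore[assignment]
    return {"mean": sum(values) / len(values)}


def publisher(store: MetadataStore, outputs: Dict[str, object],
              output_keys: Dict[str, str]) -> None:
    """Phase 3: record the declared outputs back into the store."""
    for name, key in output_keys.items():
        store[key] = outputs[name]


def run_component(store: MetadataStore,
                  input_keys: Dict[str, str],
                  output_keys: Dict[str, str],
                  executor: Callable[[Dict[str, object]], Dict[str, object]]) -> None:
    """Run driver, executor, and publisher in order for one component."""
    inputs = driver(store, input_keys)
    outputs = executor(inputs)
    publisher(store, outputs, output_keys)
```

Because each component reads from and writes to the store, a downstream component can be wired to "statistics/1" without holding a direct reference to the component that produced it.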

Types of custom components

- Python function-based components
  - The specification is completely defined in the Python code.
  - The function’s arguments with type annotations describe the input artifacts, output artifacts, and parameters.
  - The function’s body defines the component’s executor.
  - The component interface is defined by adding the @component decorator.

      from tfx.dsl.component.experimental.annotations import (
          InputArtifact, OutputArtifact, OutputDict, Parameter)
      from tfx.dsl.component.experimental.decorators import component
      from tfx.types.standard_artifacts import Model


      @component
      def MyComponent(
          model: InputArtifact[Model],
          output: OutputArtifact[Model],
          threshold: Parameter[int] = 10,
      ) -> OutputDict(accuracy=float):
          """Outline only; the executor logic would go here."""
          # Placeholder result; a real executor would compute it.
          return {'accuracy': 0.0}

- Container-based components
  - This is suitable for building a component with a custom runtime environment and dependencies.

- Fully custom components
  - This is for building a component that is not among the built-in TFX standard components.
  - It lets us build a component by implementing custom component specification, executor, and component interface classes.
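For the Python function-based components described above, it may help to see how a specification can be read off a function’s type annotations at all. The sketch below is plain Python and is not how TFX implements its decorator: ToyInput, ToyOutput, ToyParam, and derive_spec are hypothetical stand-ins that only mimic the shape of the annotations.

```python
import inspect
from typing import Dict, Generic, TypeVar, get_args, get_origin

T = TypeVar("T")


class ToyInput(Generic[T]):
    """Marks an argument as an input artifact of type T."""


class ToyOutput(Generic[T]):
    """Marks an argument as an output artifact of type T."""


class ToyParam(Generic[T]):
    """Marks an argument as a parameter of type T."""


class Model:
    """Stand-in artifact type."""


KINDS = {ToyInput: "inputs", ToyOutput: "outputs", ToyParam: "parameters"}


def derive_spec(func) -> Dict[str, Dict[str, str]]:
    """Group a function's arguments into inputs, outputs, and parameters."""
    spec: Dict[str, Dict[str, str]] = {"inputs": {}, "outputs": {}, "parameters": {}}
    for name, param in inspect.signature(func).parameters.items():
        kind = KINDS.get(get_origin(param.annotation))
        if kind is None:
            raise TypeError(f"argument {name!r} needs a ToyInput/ToyOutput/ToyParam annotation")
        (inner_type,) = get_args(param.annotation)
        spec[kind][name] = inner_type.__name__
    return spec


def my_component(
    model: ToyInput[Model],
    output: ToyOutput[Model],
    threshold: ToyParam[int] = 10,
):
    """The body would be the executor; it is irrelevant to the derived spec."""
```

The takeaway is that the signature alone carries enough information to build the specification, which is why this style needs no separate spec class.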

5. Code

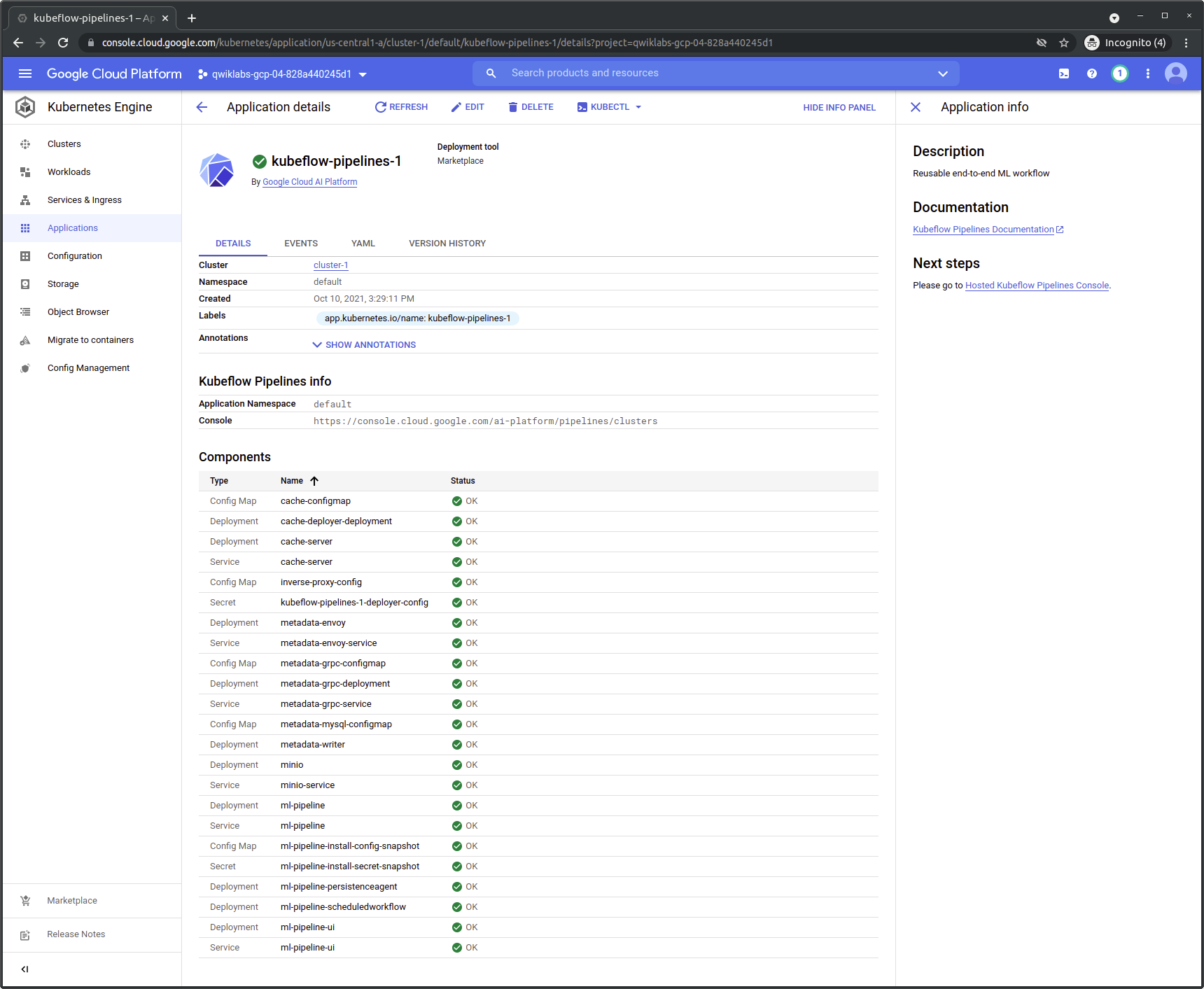

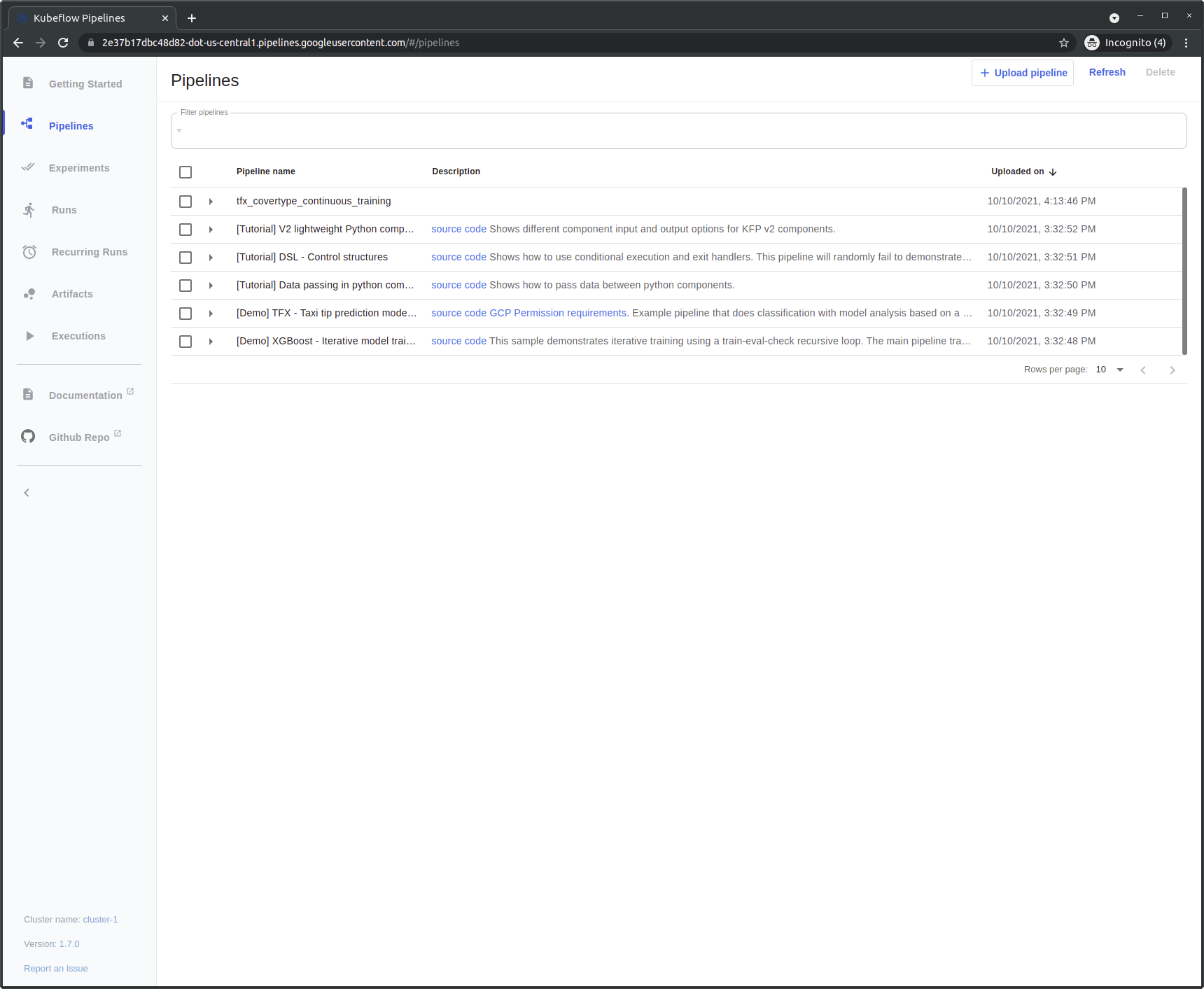

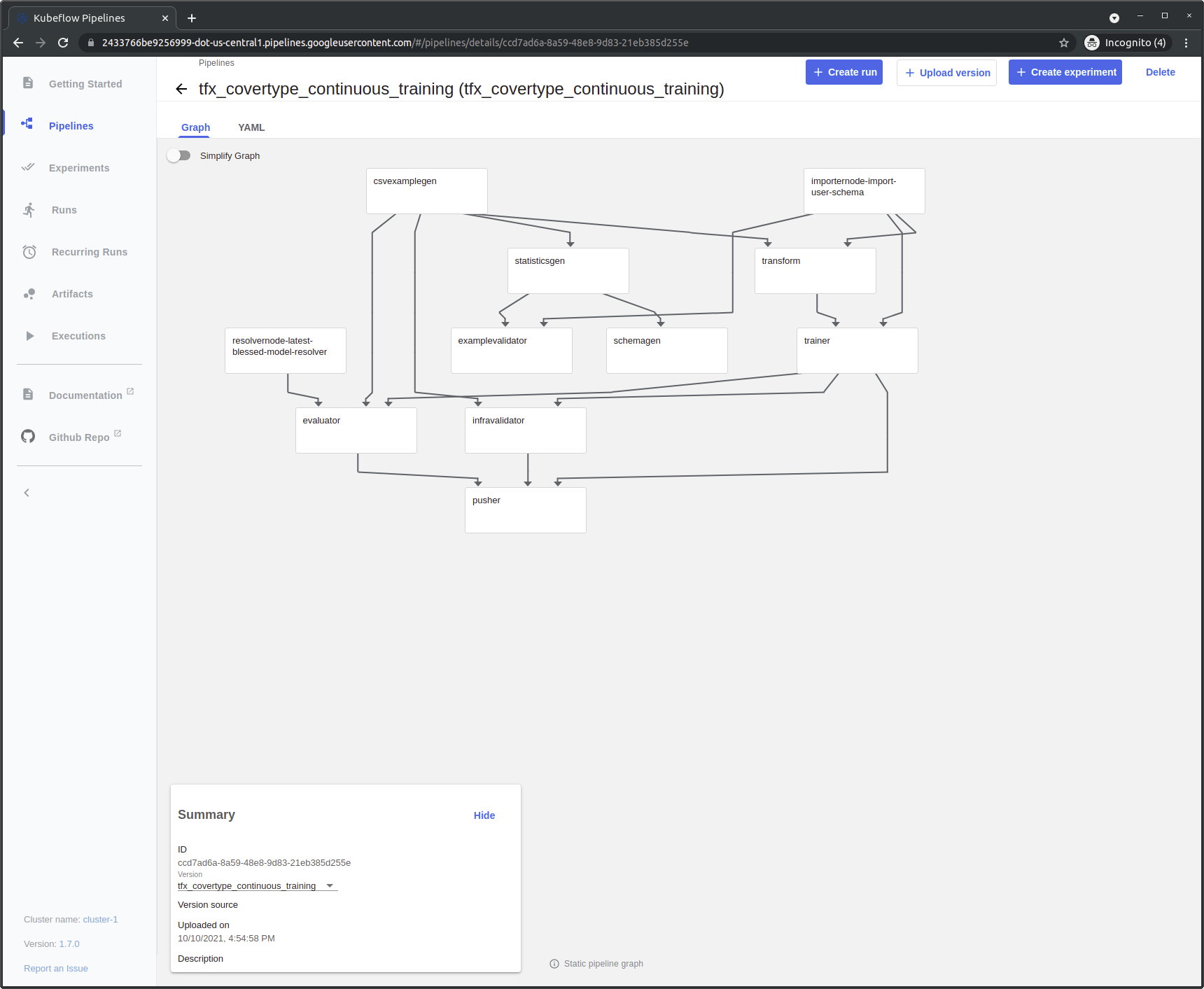

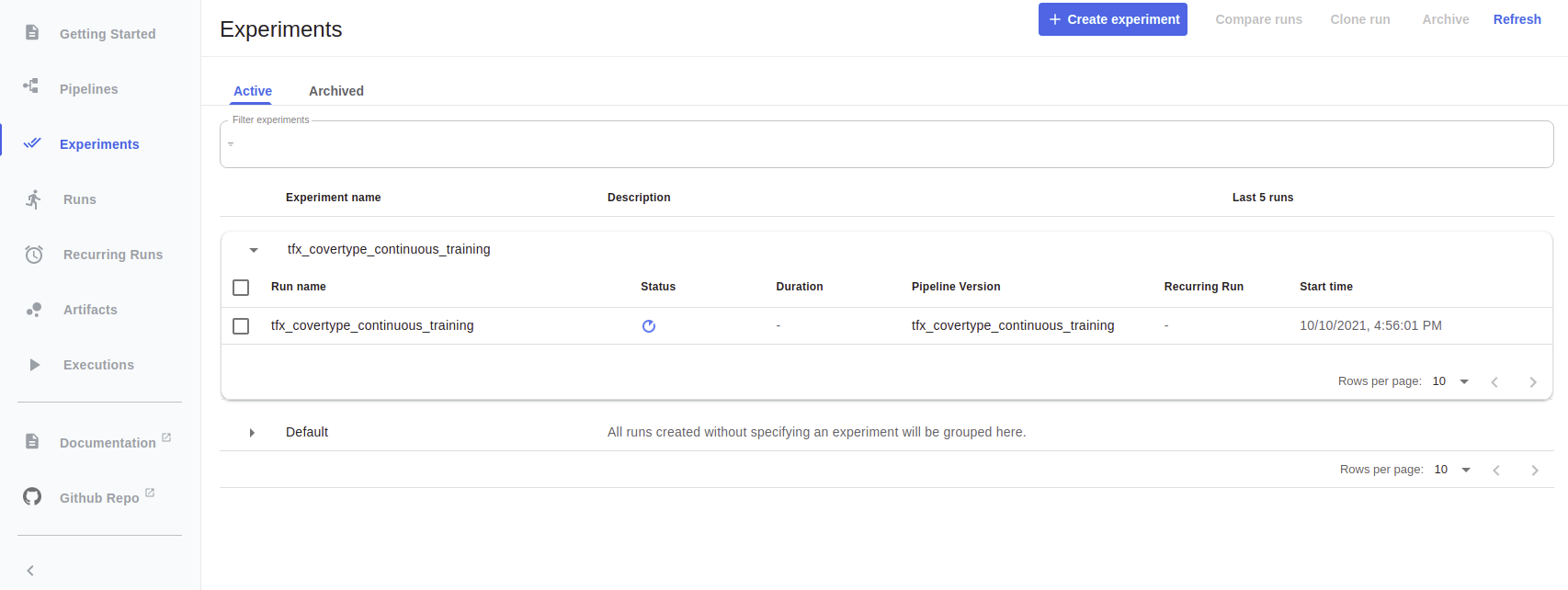

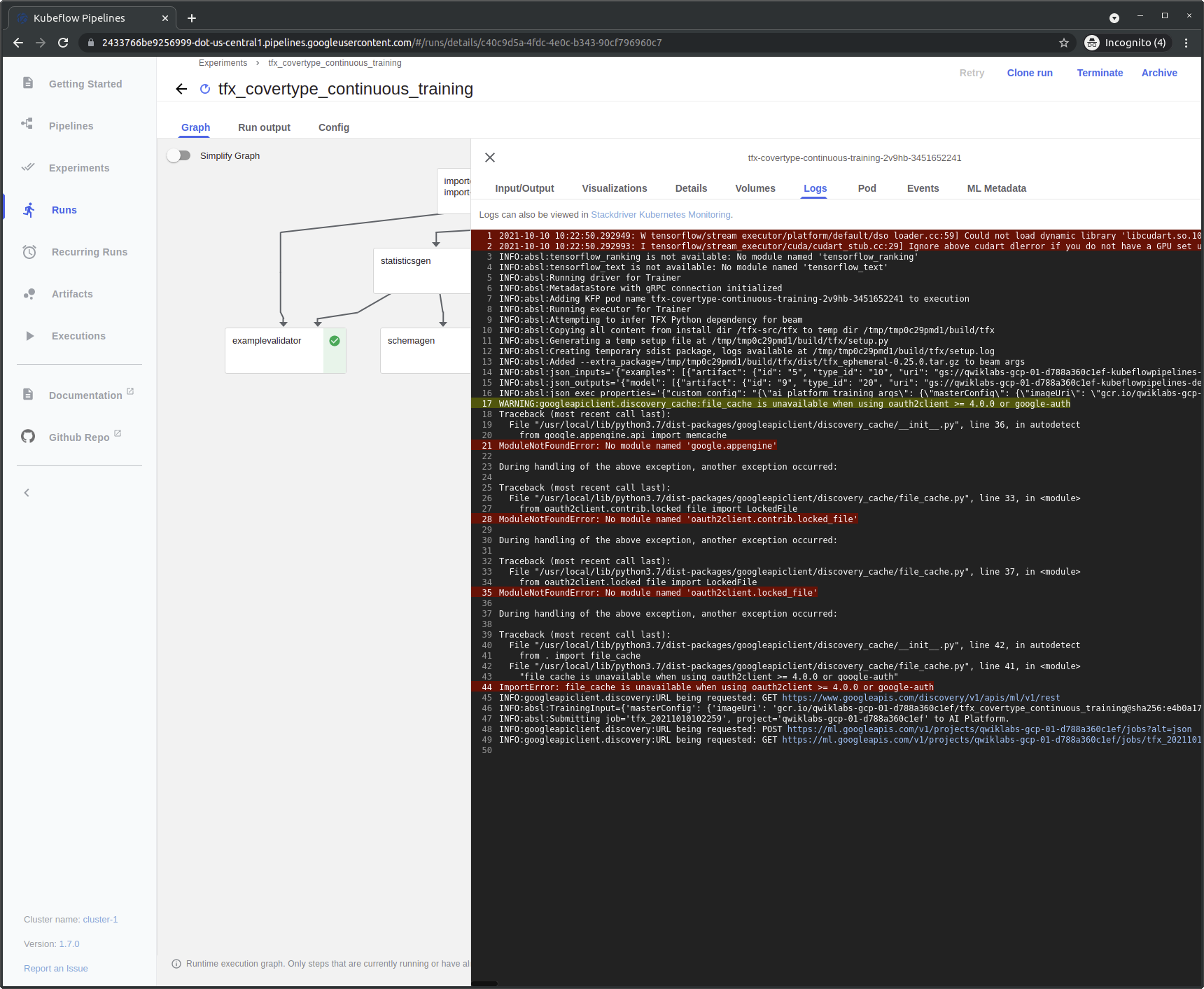

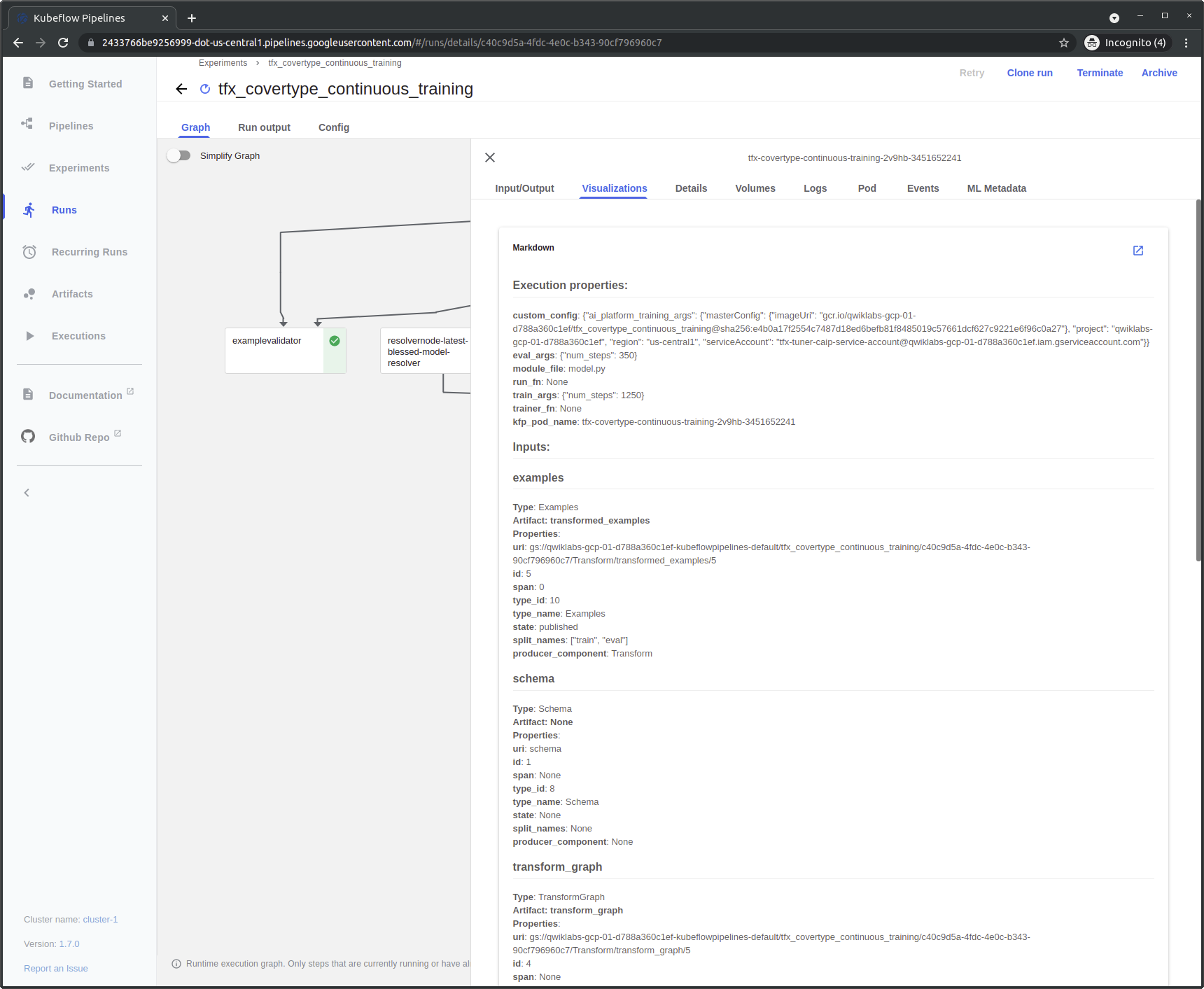

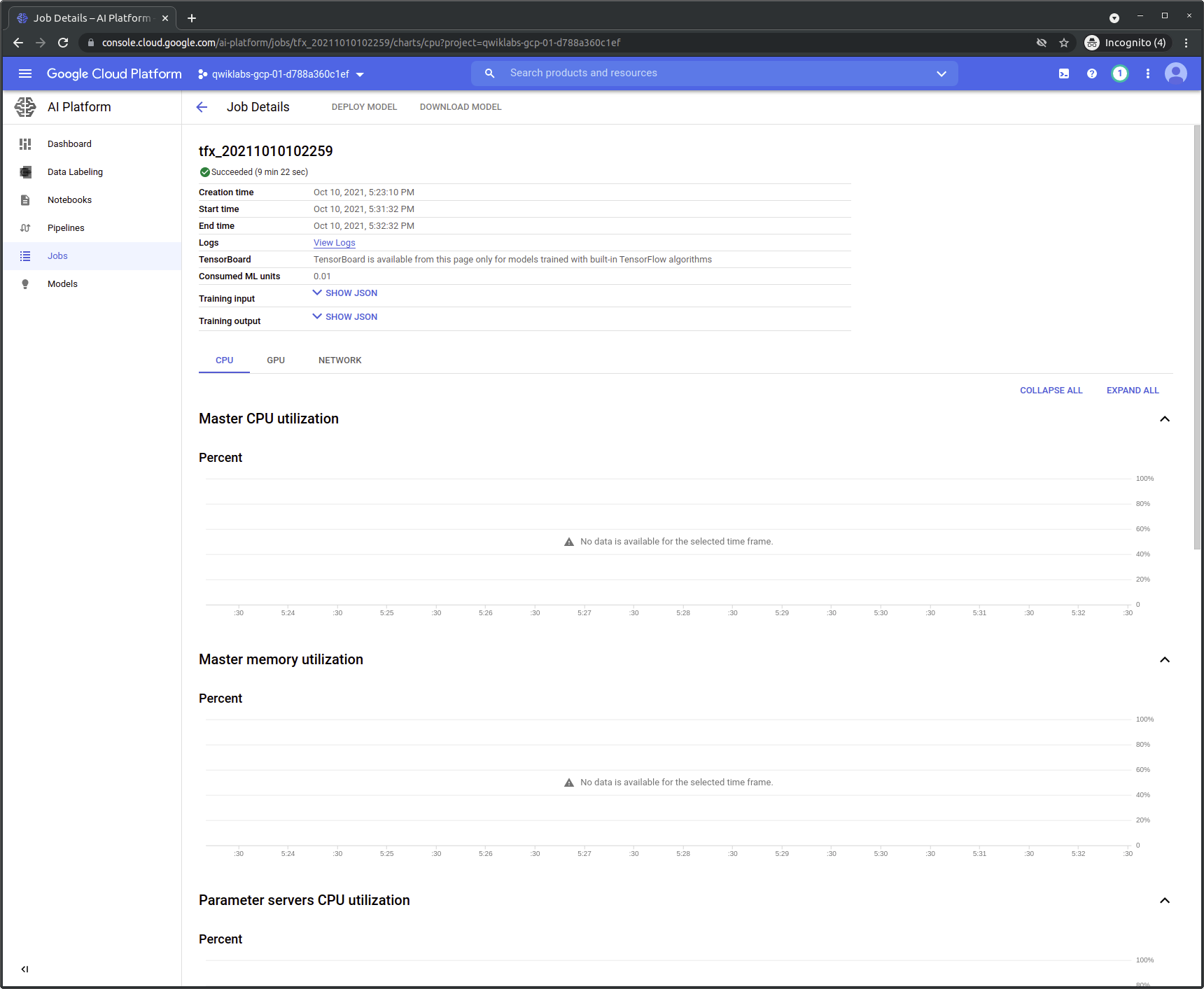

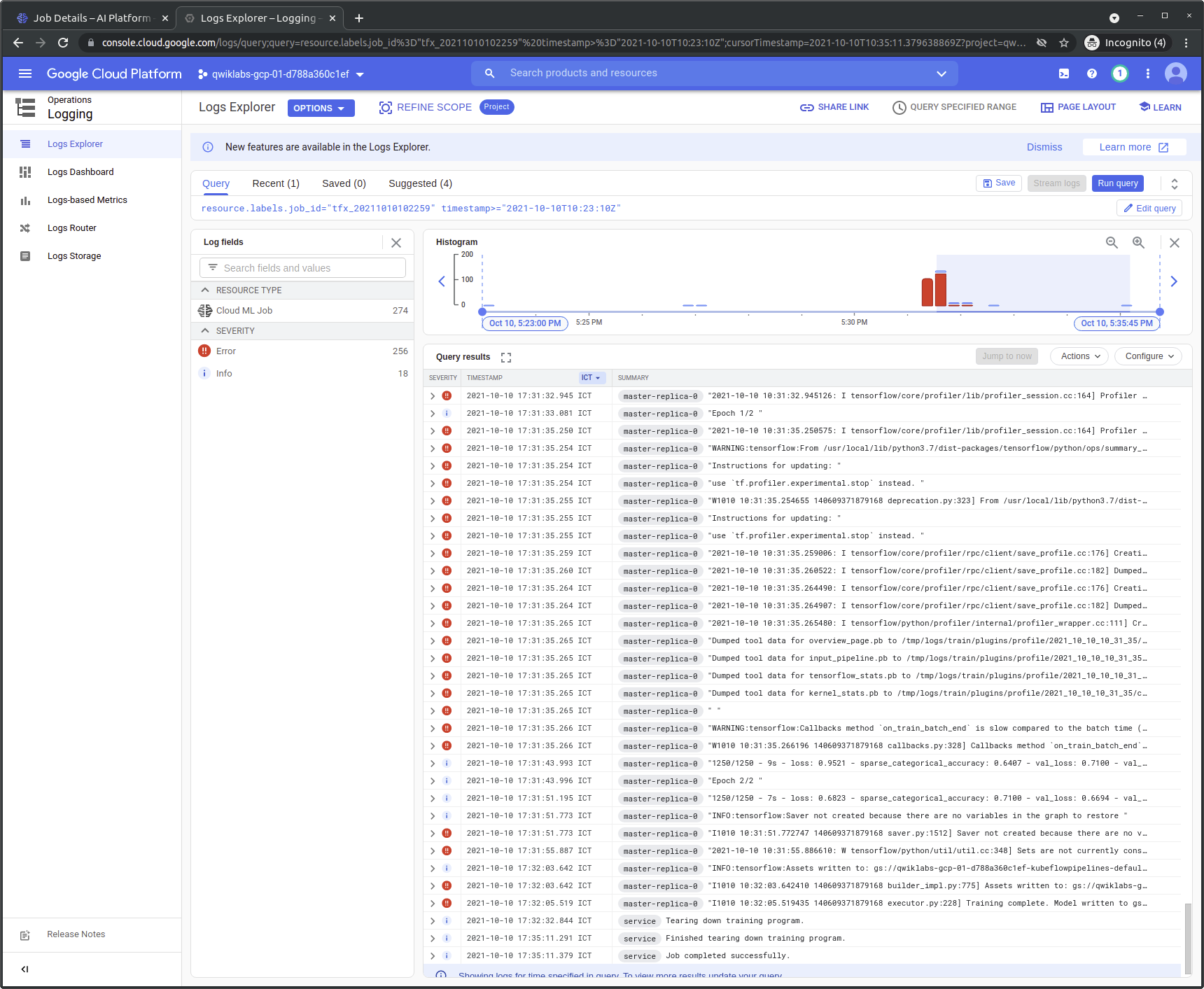

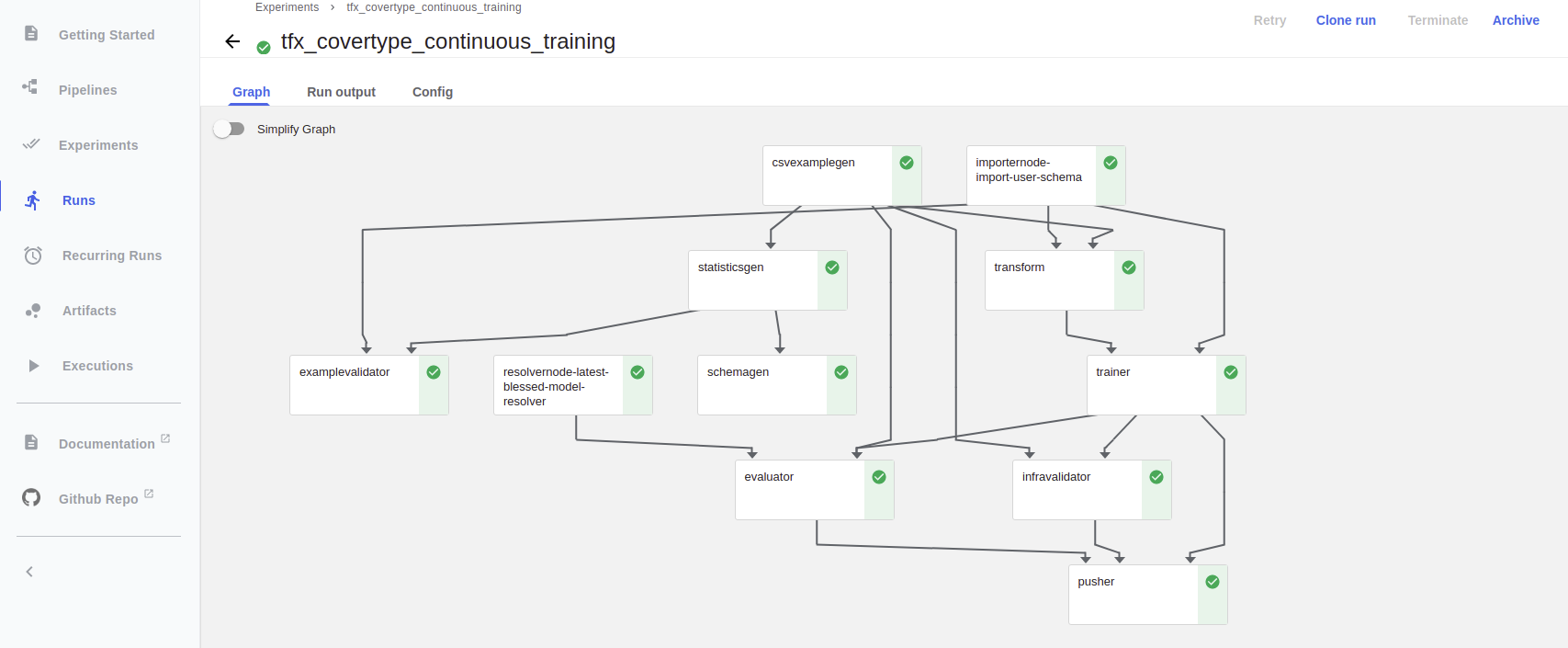

6. Pipeline Dashboard

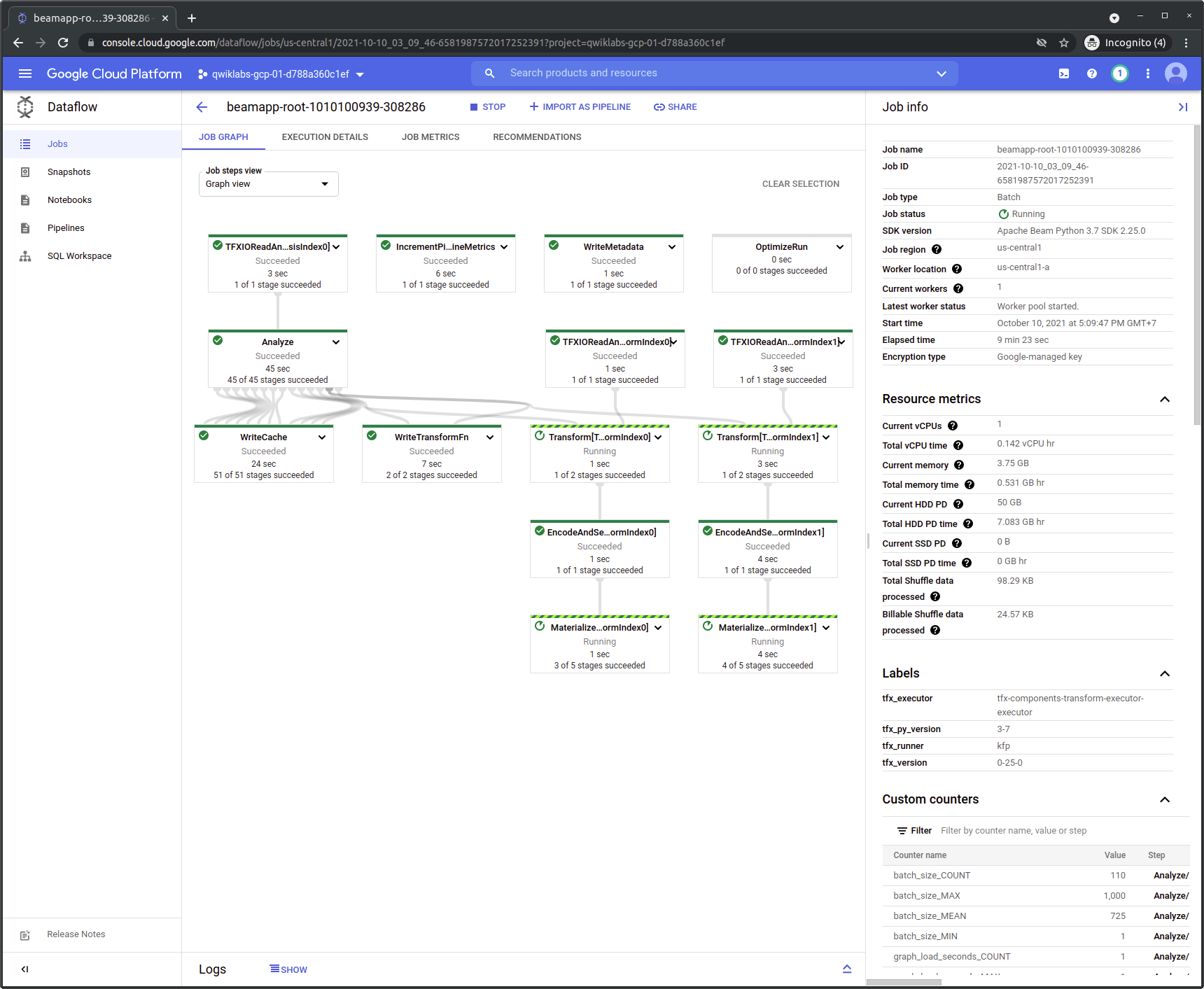

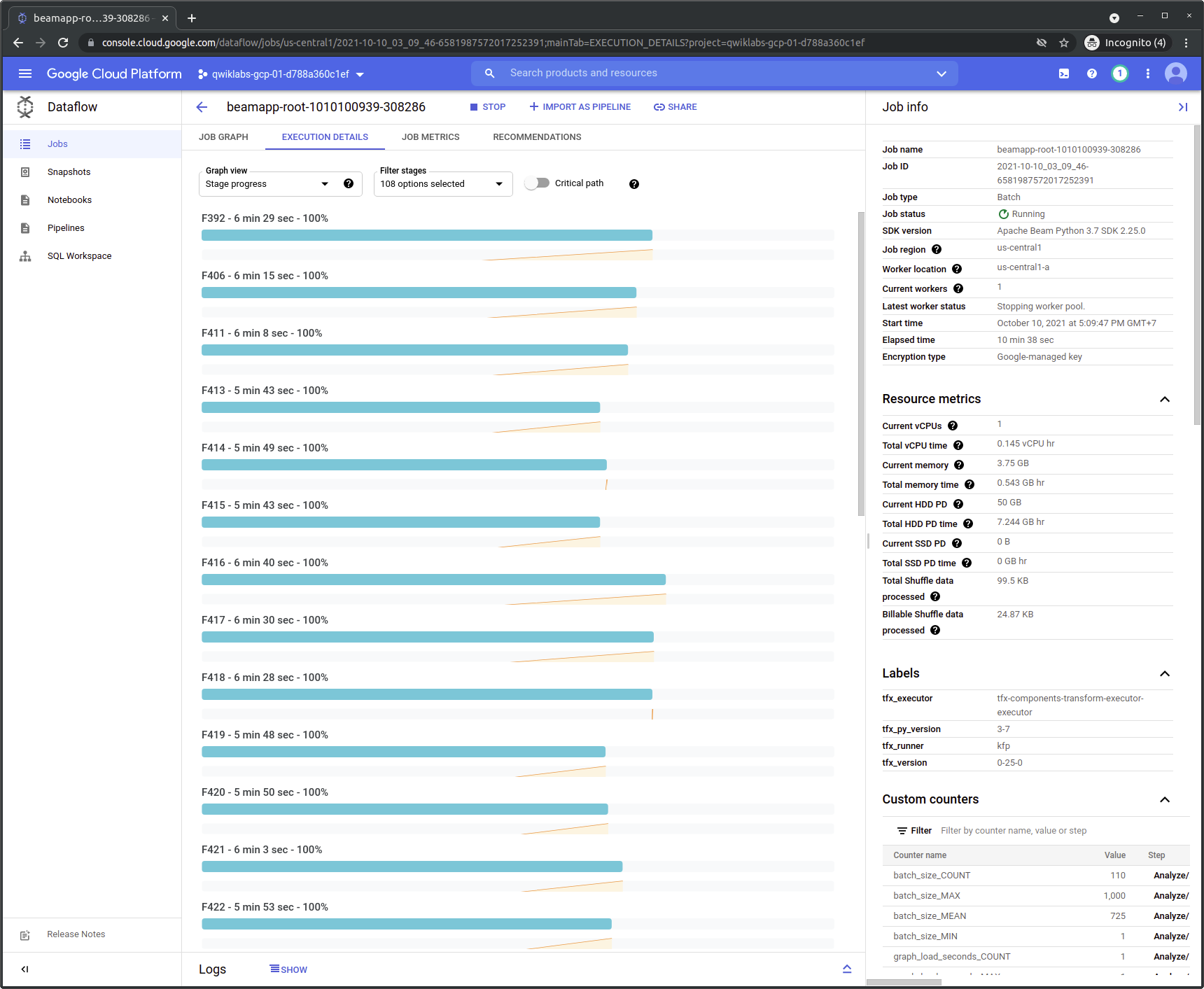

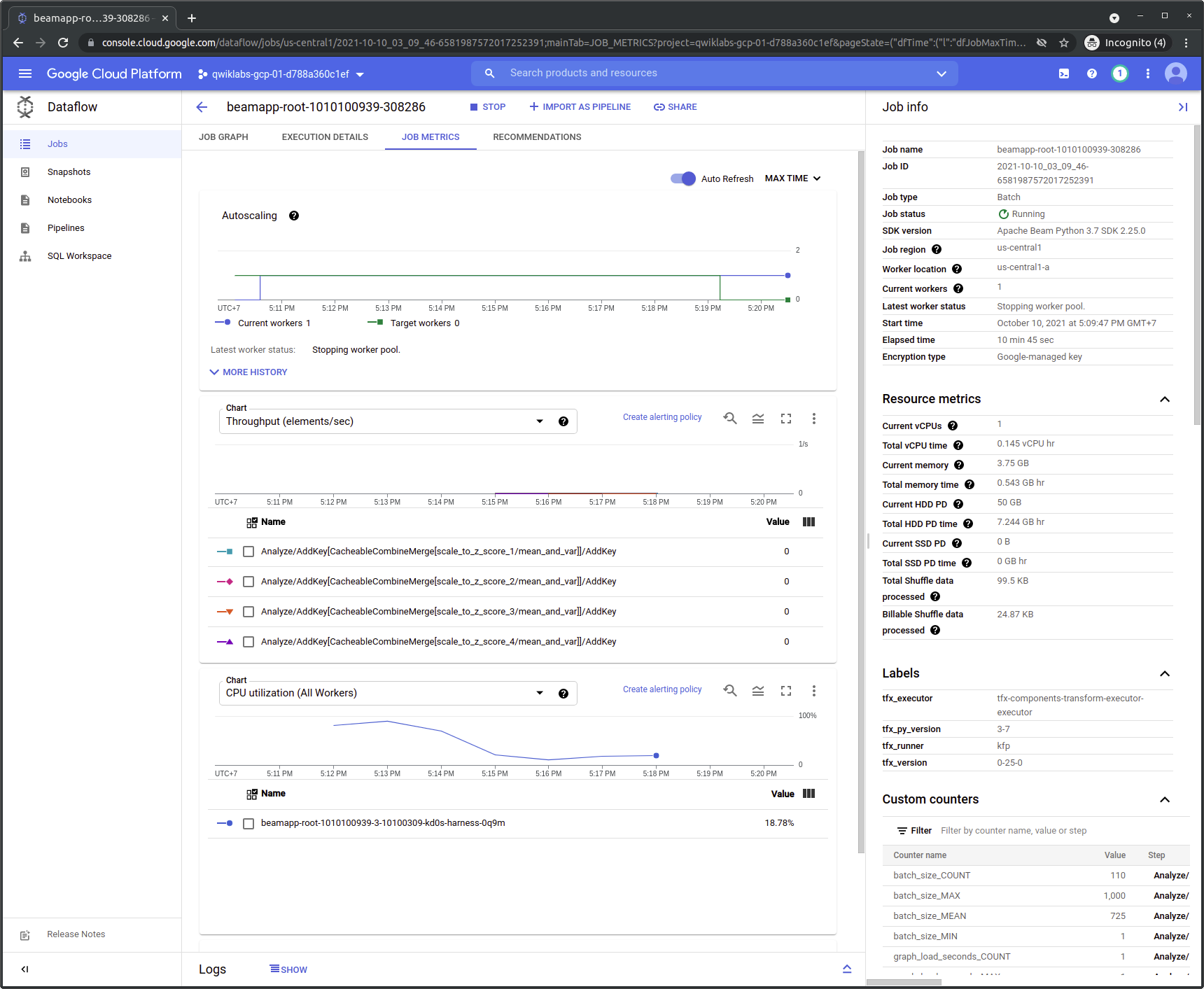

7. Dataflow

My Thoughts

- TFX seems to be built around TensorFlow. I am not sure it would work with other DL/ML libraries without heavily modifying the TFX components. But if we are already in the Google Cloud/TensorFlow ecosystem, stick with it!

- Unlike TFX, MLflow seems to be more general and more open to other DL/ML libraries.